Why Integrated FEED Is the Control Point Your Greenfield Project Can’t Ignore

Key Points at a Glance

Greenfield outcomes are largely decided in the front end. This blog covers four things: what Integrated FEED means, the six decisions FEED must lock with evidence, a practical playbook for your next greenfield, and the governance and commissioning practices that keep integration from unraveling after FEED exit.

Greenfield projects succeed when the front end is tight, connected, and disciplined. The faster teams lock scope, align on execution, and settle the digital and operational requirements, the smoother everything else runs. Owners know this, yet many still treat FEED as a technical box to tick instead of the control point that shapes the entire investment. The gap between a promising concept and a successfully commissioned plant is where billions of dollars and countless project schedules can disappear.

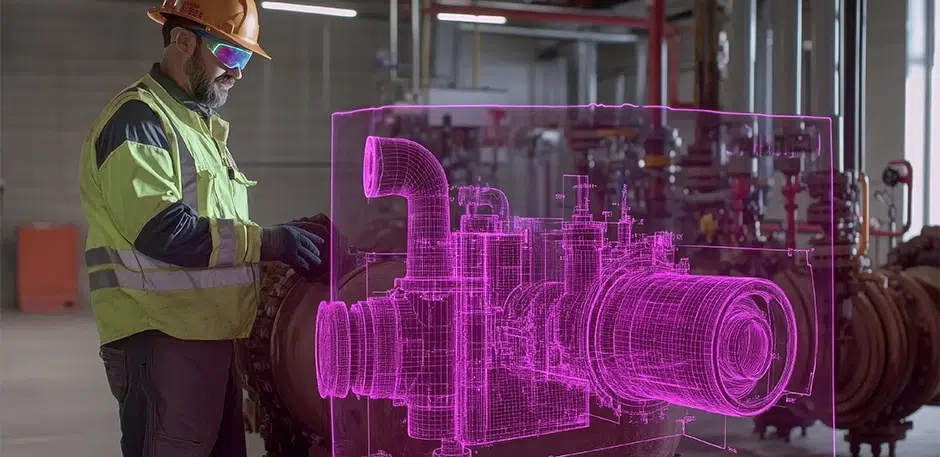

Put simply, Integrated FEED brings process design, construction logic, procurement strategy, automation planning, cybersecurity, and commissioning into one workflow. Decisions land once, interfaces stay clean, costs stop drifting, and schedules stop slipping. The plant that shows up at commissioning actually reflects the business case that justified the project in the first place.

An integrated FEED is how you avoid late redesigns, weeks lost to rework, and the unplanned spend that keeps showing up in audits long after startup.

What integrated FEED actually means

Front-End Engineering Design (FEED) sits between concept and detailed engineering. It translates the business case and constraints into several deliverables: a frozen scope, a basis of design, a Class 3 estimate, an execution strategy, and a commissioning plan that is credible and testable. In an integrated model, FEED is not just process and piping. It is the place where engineering, procurement, construction, operations, and controls converge to make tradeoffs visible and auditable. (Source: Uniteltech)

Two proven scaffolds help keep FEED honest:

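- FEL and stage gates: Mature owners benchmark FEED quality before funding. They use Front-End Loading (FEL) indices and tools such as the Construction Industry Institute's (CII) Project Definition Rating Index (PDRI) to quantify how well the project is defined.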

- AWP by design: Advanced Work Packaging (AWP) starts in the front end, not a month before mobilization. Breaking scope into construction-driven packages during FEED de-risks access, laydown, module logic, and path of construction.
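To make "quantify definition" concrete, here is a minimal sketch of a PDRI-style score and its trend across gates. It assumes a hypothetical handful of elements, made-up maximum weights, and a simplified linear mapping from definition level to points. Level 1 means complete and level 5 means poorly defined. The real CII PDRI uses its own element list and weighting tables, so treat this as illustration only. As with PDRI, lower scores mean better definition.

```python
# Hypothetical elements and maximum weights; NOT the official CII PDRI element list or tables.
MAX_WEIGHTS = {
    "process_flow_sheets": 36,
    "plot_plan": 30,
    "equipment_list": 28,
    "project_control_requirements": 26,
}


def element_score(max_weight: float, level: int) -> float:
    """Simplified linear mapping: level 1 (complete) scores 0, level 5 (poor) scores max_weight.
    Level 0 marks an element as not applicable and contributes nothing."""
    if level == 0:
        return 0.0
    if not 1 <= level <= 5:
        raise ValueError(f"definition level must be 0-5, got {level}")
    return max_weight * (level - 1) / 4


def pdri_style_score(ratings: dict[str, int]) -> float:
    """Sum of element scores; lower means better-defined scope."""
    return sum(element_score(MAX_WEIGHTS[name], level) for name, level in ratings.items())


def is_improving(trend: list[float]) -> bool:
    """True if the score never rises from one gate to the next."""
    return all(later <= earlier for earlier, later in zip(trend, trend[1:]))


# Hypothetical ratings at three successive gates.
gate_ratings = [
    {"process_flow_sheets": 4, "plot_plan": 5, "equipment_list": 4, "project_control_requirements": 5},
    {"process_flow_sheets": 3, "plot_plan": 3, "equipment_list": 2, "project_control_requirements": 3},
    {"process_flow_sheets": 1, "plot_plan": 2, "equipment_list": 1, "project_control_requirements": 2},
]
trend = [pdri_style_score(r) for r in gate_ratings]
```

With these made-up ratings, the trend falls from 104 to 53 to 14. That is the kind of gate-over-gate improvement worth publishing.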

The six decisions FEED must lock with evidence

1. Value case and operating context

Translate business objectives into measurable performance targets. Throughput, energy intensity, emissions, operability, maintainability, cybersecurity posture, and staffing profiles all belong in the FEED KPIs and acceptance criteria. Use CII front end planning rules to tie each target back to scope and strategy.
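One way to make FEED KPIs testable is to record each target with its unit, its acceptance limit, and whether higher or lower is better. Predicted or measured values can then be checked against those targets. The sketch below does this for a few KPIs. The KPI names, units, and limits are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTarget:
    name: str
    unit: str
    limit: float
    higher_is_better: bool  # e.g. throughput: True; energy intensity: False

    def passes(self, value: float) -> bool:
        return value >= self.limit if self.higher_is_better else value <= self.limit


# Hypothetical FEED acceptance criteria, each meant to trace back to the value case.
TARGETS = [
    KpiTarget("throughput", "t/h", 120.0, True),
    KpiTarget("energy_intensity", "GJ/t", 3.2, False),
    KpiTarget("scope1_emissions", "tCO2e/t", 0.45, False),
]


def failing_kpis(values: dict[str, float]) -> list[str]:
    """Names of KPIs whose value is missing or misses its acceptance limit."""
    return [t.name for t in TARGETS if t.name not in values or not t.passes(values[t.name])]
```

A missing value counts as a failure here, so a KPI nobody has estimated cannot quietly pass a gate.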

2. Process and technology choices

Run technology alternatives through lifecycle economics, constructability, and utilities balance, not just nameplate capacity. The FEED package should capture why the selected process wins on total installed cost, schedule risk, operations readiness, and digital maintainability.
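A first-pass lifecycle comparison can discount each alternative's installed cost and annual operating cost to a net present cost. The sketch below assumes hypothetical figures, a flat annual opex, a 20-year life, and a single 8% discount rate. A real FEED evaluation would add schedule risk, operability, and maintainability scoring alongside it.

```python
def net_present_cost(capex: float, annual_opex: float, years: int, rate: float) -> float:
    """Capex at year 0 plus opex discounted at the end of each year 1..years."""
    return capex + sum(annual_opex / (1 + rate) ** t for t in range(1, years + 1))


# Hypothetical alternatives, in millions of currency units.
alternatives = {
    "tech_A": {"capex": 400.0, "annual_opex": 30.0},
    "tech_B": {"capex": 350.0, "annual_opex": 38.0},
}


def rank_by_lifecycle_cost(alts: dict, years: int = 20, rate: float = 0.08) -> list[tuple[str, float]]:
    """Alternatives sorted from lowest to highest net present cost."""
    costs = {name: net_present_cost(a["capex"], a["annual_opex"], years, rate) for name, a in alts.items()}
    return sorted(costs.items(), key=lambda kv: kv[1])
```

With these made-up numbers, tech_A ranks first at roughly 694.5 versus 723.1, despite its higher installed cost. That is why capex or nameplate capacity alone can mislead.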

3. Execution and contracting strategy

Choose where to place interface risk. Package strategy, market sounding, and the change-management model must be designed together. AWP during FEED informs package splits that line up with the planned path of construction and site logistics.

4. Digital thread and model-based delivery

Treat the 3D model and the data behind it as contract deliverables. Use BIM information management practices such as ISO 19650 to define information requirements, model federation, naming, approval workflows, and turnover formats. Your commissioning team will thank you.
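Naming rules are easiest to enforce when machines can check them. The sketch below validates container names against a hypothetical pattern. It is loosely modeled on the field order in the UK National Annex to ISO 19650-2: project, originator, volume/system, level/location, type, role, number. The field lengths and allowed characters are assumptions; your own information standard would define the real ones.

```python
import re
from typing import Optional

# Hypothetical field rules; the project's information standard defines the real ones.
NAME_PATTERN = re.compile(
    r"(?P<project>[A-Z0-9]{2,6})"
    r"-(?P<originator>[A-Z0-9]{3,6})"
    r"-(?P<volume>[A-Z0-9]{2})"
    r"-(?P<level>[A-Z0-9]{2})"
    r"-(?P<type>[A-Z]{2})"
    r"-(?P<role>[A-Z]{1,2})"
    r"-(?P<number>\d{6})"
)


def parse_container_name(name: str) -> Optional[dict[str, str]]:
    """Return the named fields if the whole name conforms, else None."""
    match = NAME_PATTERN.fullmatch(name)
    return match.groupdict() if match else None
```

Using fullmatch rather than a search means a conforming name cannot hide inside a longer, non-conforming string.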

5. Controls, connectivity, and cybersecurity by design

Bake in ISA/IEC 62443 requirements during FEED. Define zones and conduits, supplier secure-development expectations, hardening baselines, and test plans now. Specifying them at this stage is cheaper than retrofitting them after the site acceptance test (SAT).
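Zones and conduits form a graph, which makes them checkable during FEED. This minimal sketch records a target security level (SL-T) for each zone and conduit. It flags two problems: conduits that reference undefined zones, and conduits with a lower SL-T than the stricter zone they connect. That second rule is a hypothetical project convention, not a requirement quoted from the standard. The zone and conduit names are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conduit:
    name: str
    zone_a: str
    zone_b: str
    sl_target: int  # target security level (SL-T) assigned to the conduit


# Hypothetical zones mapped to their target security levels (SL-T).
ZONES = {"enterprise_dmz": 2, "control_zone": 3, "sis_zone": 4}

# Hypothetical conduits as they might appear on a FEED zone-and-conduit drawing.
CONDUITS = [
    Conduit("c1", "enterprise_dmz", "control_zone", 3),
    Conduit("c2", "control_zone", "sis_zone", 3),
    Conduit("c3", "control_zone", "historian_zone", 2),
]


def conduit_findings(zones: dict[str, int], conduits: list[Conduit]) -> list[str]:
    """List conduits that reference undefined zones or are weaker than the zones they join."""
    findings = []
    for c in conduits:
        missing = [z for z in (c.zone_a, c.zone_b) if z not in zones]
        if missing:
            findings.append(f"{c.name}: undefined zone(s) {missing}")
            continue
        # Hypothetical project rule: a conduit's SL-T must be at least that of the stricter zone.
        required = max(zones[c.zone_a], zones[c.zone_b])
        if c.sl_target < required:
            findings.append(f"{c.name}: SL-T {c.sl_target} below required {required}")
    return findings
```

Running `conduit_findings(ZONES, CONDUITS)` on this data flags c2 (too weak for the SIS zone) and c3 (undefined historian zone). Both are gaps best closed in FEED rather than at SAT.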

6. Commissioning and operations readiness

Write the commissioning strategy during FEED, not after detailed design starts. Lock the turnover sequence, punchlist philosophy, digital walkdowns, and performance test criteria with the same rigor as process guarantees.
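Turnover sequencing is a dependency problem: a system cannot be turned over for commissioning until the systems it relies on are ready. Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) can derive valid turnover waves from a dependency map. The system names and dependencies below are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical systems mapped to the systems they depend on.
DEPENDENCIES = {
    "electrical_distribution": set(),
    "instrument_air": {"electrical_distribution"},
    "cooling_water": {"electrical_distribution"},
    "dcs": {"electrical_distribution"},
    "feed_pumps": {"dcs", "cooling_water", "instrument_air"},
    "reactor_train": {"feed_pumps"},
}


def turnover_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group systems into waves; each wave depends only on earlier waves.
    Raises graphlib.CycleError if the dependencies are circular."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the output deterministic
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

Systems in the same wave can be worked in parallel. A circular dependency surfaces as an error during FEED instead of as a stalled turnover in the field.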

The integrated FEED playbook

Here is a practical, end-to-end approach you can apply on the next greenfield.

1. Start with measurable definition

- Build the FEED plan around CII’s front end planning principles and run a baseline PDRI to quantify definition gaps. Re-score at each gate and publish the trend.

- Align your cost estimate to AACE International estimate classes. Move from Class 5 or Class 4 in early concept to Class 3 at FEED exit, with a documented basis and risk ranges.
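To show what the class targets mean in practice, this sketch applies the typical accuracy ranges from AACE International Recommended Practice 18R-97 for process-industry estimates to a point estimate. It uses the wider end of each class's low and high range. The right bounds for a specific project depend on its risk profile, and the 500 (million) point estimate in the usage note is a made-up figure.

```python
# Wider end of the typical accuracy ranges in AACE RP 18R-97 (process industries),
# as (low, high) fractions of the point estimate.
AACE_WIDEST_RANGES = {
    5: (-0.50, 1.00),
    4: (-0.30, 0.50),
    3: (-0.20, 0.30),
    2: (-0.15, 0.20),
    1: (-0.10, 0.15),
}


def estimate_bounds(point_estimate: float, estimate_class: int) -> tuple[float, float]:
    """Low and high bounds implied by the estimate class."""
    low, high = AACE_WIDEST_RANGES[estimate_class]
    return point_estimate * (1 + low), point_estimate * (1 + high)
```

For a 500 (million) point estimate, Class 5 implies roughly 250 to 1,000, while Class 3 narrows that to roughly 400 to 650. That narrowing is what FEED is meant to buy.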

2. Connect model, estimate, and schedule

- Tie the 3D model, line list, cable list, and equipment data to the estimate structure and schedule coding. This makes quantity growth and design churn visible in near real time and helps procurement time the market.
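The model-to-estimate link can start as a simple comparison of model take-off quantities against the estimate basis, keyed by a shared code. The codes, quantities, and 5% threshold below are hypothetical.

```python
def quantity_growth(estimate_basis: dict[str, float], model_takeoff: dict[str, float],
                    threshold: float = 0.05) -> dict[str, float]:
    """Codes whose model quantity differs from the estimate basis by more than `threshold`
    (a fraction), mapped to their fractional growth. Codes with no (or zero) estimate
    quantity are reported as infinite growth."""
    flagged = {}
    for code, modeled in model_takeoff.items():
        basis = estimate_basis.get(code)
        if not basis:
            flagged[code] = float("inf")
            continue
        growth = (modeled - basis) / basis
        if abs(growth) > threshold:
            flagged[code] = growth
    return flagged


# Hypothetical estimate basis and current model take-off (metres of pipe/cable, tonnes of steel).
estimate_basis = {"PIPE-CS-150": 1200.0, "CABLE-INST": 8000.0, "STEEL-PR": 450.0}
model_takeoff = {"PIPE-CS-150": 1350.0, "CABLE-INST": 8200.0, "STEEL-PR": 430.0, "PIPE-SS-50": 90.0}
```

Here the carbon-steel pipe (+12.5%) and the unestimated stainless line are flagged. The cable and steel movements stay inside the threshold. Run weekly, this makes quantity growth visible while there is still time to act on it.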

3. Design for constructability and the path of construction

- Use AWP to define Construction Work Areas and Engineering Work Packages in FEED. Let the path of construction drive module splits, laydown sizing, heavy-lift plans, and temporary works.
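When the path of construction drives packaging, each Engineering Work Package (EWP) has a need date set by the Construction Work Area (CWA) it feeds. This minimal sketch flags EWPs whose planned issue date would starve their CWA. The package IDs, dates, and four-week procurement-and-mobilization buffer are all hypothetical.

```python
from datetime import date, timedelta

# Hypothetical CWAs in path-of-construction order, with planned construction start.
CWA_START = {"CWA-01": date(2026, 3, 2), "CWA-02": date(2026, 5, 4)}

# Hypothetical EWPs: (supported CWA, planned issue date).
EWP_PLAN = {
    "EWP-01-CIV": ("CWA-01", date(2026, 1, 19)),
    "EWP-01-STL": ("CWA-01", date(2026, 2, 16)),
    "EWP-02-PIP": ("CWA-02", date(2026, 3, 30)),
}


def late_ewps(cwa_start: dict[str, date], ewp_plan: dict[str, tuple[str, date]],
              buffer: timedelta = timedelta(weeks=4)) -> list[str]:
    """EWPs planned to issue later than (CWA start - buffer)."""
    return [ewp for ewp, (cwa, issue) in ewp_plan.items() if issue > cwa_start[cwa] - buffer]
```

With this data, only EWP-01-STL is late. It issues two weeks after CWA-01's need date of 2 February 2026, which is exactly the kind of conflict to resolve in FEED by resequencing engineering or splitting the package.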

4. Engineer the digital plant, not just the physical plant

- Define the operational information model, tag standards, and the interfaces that will carry deterministic information exchange between OT and IT. If you use OPC UA, specify profiles, security modes, and certificate handling now so vendors design to the same playbook. Fold this into your industrial automation services scope and FAT protocols.

- Mandate information handover aligned to ISO 19650, including asset registers, loop folders, cause-and-effect matrices, and calibration data in open, machine-readable formats.
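Specifying OPC UA security up front can be as concrete as two rules. Every vendor endpoint must use an allowed security policy, and it must meet a minimum message security mode. The policy URIs and mode names below are defined in the OPC UA specification. Which ones a project allows, and the vendor endpoints themselves, are assumptions for illustration. No OPC UA client library is used; the check runs on declared endpoint data, such as entries taken from vendor datasheets.

```python
# Project rule (assumption): only these OPC UA security policies are acceptable.
ALLOWED_POLICIES = {
    "http://opcfoundation.org/UA/SecurityPolicy#Basic256Sha256",
    "http://opcfoundation.org/UA/SecurityPolicy#Aes128_Sha256_RsaOaep",
    "http://opcfoundation.org/UA/SecurityPolicy#Aes256_Sha256_RsaPss",
}
# OPC UA message security modes, ranked weakest to strongest.
MODE_RANK = {"None": 0, "Sign": 1, "SignAndEncrypt": 2}
REQUIRED_MODE = "SignAndEncrypt"  # project rule (assumption)


def endpoint_violations(endpoints: list[dict[str, str]]) -> list[str]:
    """Each endpoint dict holds the vendor-declared 'url', 'policy', and 'mode'."""
    problems = []
    for ep in endpoints:
        if ep["policy"] not in ALLOWED_POLICIES:
            policy_name = ep["policy"].rsplit("#", 1)[-1]
            problems.append(f"{ep['url']}: policy {policy_name} not allowed")
        if MODE_RANK.get(ep["mode"], -1) < MODE_RANK[REQUIRED_MODE]:
            problems.append(f"{ep['url']}: mode {ep['mode']} below {REQUIRED_MODE}")
    return problems


# Hypothetical vendor declarations for two package skids.
declared = [
    {"url": "opc.tcp://skid-a:4840",
     "policy": "http://opcfoundation.org/UA/SecurityPolicy#Basic256Sha256", "mode": "SignAndEncrypt"},
    {"url": "opc.tcp://skid-b:4840",
     "policy": "http://opcfoundation.org/UA/SecurityPolicy#Basic128Rsa15", "mode": "Sign"},
]
```

Checking declarations at FAT planning, rather than discovering them on site, is what "vendors design to the same playbook" looks like in practice.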

5. Secure by architecture

- Apply ISA/IEC 62443 to zone critical assets, define security levels, and allocate requirements to vendors. Include secure development for devices and software, hardening baselines, and role-based access in the FEED specification and the vendor datasheets.

6. Make commissioning part of design

- Publish system turnover boundaries, completion definitions, and pre-commissioning test packs with model references. Write performance test procedures and data capture requirements so the plant’s digital history starts at first energization.

7. Close the loop with operations

- Involve operations and maintenance in every tradeoff. Their input on staffing, alarm philosophy, spares strategy, and maintainability changes the layout, not just the manuals.

What this really means for cost, schedule, and risk

When front end planning is performed well, projects show better cost predictability, shorter schedules, and fewer changes. Those gains come from eliminating late scope movement and aligning engineering with the path of construction. The evidence base from CII spans multiple industries and project types, and it is consistent with broader empirical findings on capital projects.

If your board still needs a push, use three proof points during gate reviews:

- Quantified definition. PDRI or an FEL index trend that improves across gates.

- Estimate credibility. AACE class and a clear basis of estimate with risk ranges and contingency rationales.

- Constructability readiness. AWP artifacts from FEED that show path of construction, workface planning logic, and package splits aligned to it.
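The three proof points can be turned into a pass/fail gate check, so a review cannot proceed on narrative alone. The field names, the required AWP artifact list, and the rule that FEED exit needs Class 3 or better are hypothetical. The sketch only encodes the criteria listed above.

```python
from dataclasses import dataclass, field

REQUIRED_AWP_ARTIFACTS = {"path_of_construction", "cwa_breakdown", "ewp_list"}  # hypothetical


@dataclass
class GateEvidence:
    definition_scores: list[float]  # PDRI or FEL trend, one entry per gate, lower is better
    estimate_class: int             # AACE class, 5 (least defined) to 1
    has_basis_of_estimate: bool
    awp_artifacts: set[str] = field(default_factory=set)


def gate_gaps(ev: GateEvidence, max_class: int = 3) -> list[str]:
    """Return the proof points that are not yet evidenced; an empty list means ready."""
    gaps = []
    scores = ev.definition_scores
    if len(scores) < 2 or any(later > earlier for earlier, later in zip(scores, scores[1:])):
        gaps.append("definition trend not shown to improve across gates")
    if ev.estimate_class > max_class or not ev.has_basis_of_estimate:
        gaps.append(f"estimate not at Class {max_class} or better with a documented basis")
    missing = REQUIRED_AWP_ARTIFACTS - ev.awp_artifacts
    if missing:
        gaps.append(f"missing AWP artifacts: {sorted(missing)}")
    return gaps
```

A gate review can then open with the gap list instead of the slide deck.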

Governance that makes integration stick

- One integrated FEED manager accountable for scope, estimate, schedule, AWP readiness, and digital handover.

- A living basis of design that captures every key decision, tradeoff rationale, and ripple effect on cost and schedule.

- Gate criteria anchored on definition metrics, not narrative slides, so they cannot be waved away.

- Weekly model-estimate-schedule reconciliations so quantity growth is caught early.

Commissioning without the scramble

A strong FEED sets up commissioning to run on rails. Clear turnover boundaries reduce baton drops at handoffs, and AWP reduces crew stacking. ISO 19650-style information control turns handover from a document chase into a data load, and IEC 62443 design choices shorten the security hardening grind. The result is safer energization, fewer waivers, and a cleaner start of production. (Source: BSI Group)

Where to go from here

If you are planning a greenfield in the next twelve months, move three actions to the top of your list:

- Stand up an integrated FEED plan built on CII front end planning principles, with a PDRI baseline and AACE class targets for each gate.

- Launch AWP during FEED and let path of construction drive package strategy and site logistics.

- Lock the digital thread and cybersecurity architecture now using ISO 19650 for information management and IEC 62443 for IACS security.

Greenfield projects will always carry uncertainty. Integrated FEED turns that uncertainty into choices you can see, test, and govern. Done well, it is the difference between explaining overruns and commissioning with confidence.

This kind of discipline is easier to uphold when you work with partners who see the entire lifecycle from engineering through startup. Utthunga brings that perspective through our plant engineering services, combining strong front end practices with the digital and operational depth modern greenfield projects demand.

If you’d like to explore how this applies to your upcoming project, reach out to us and we’ll get the conversation started.