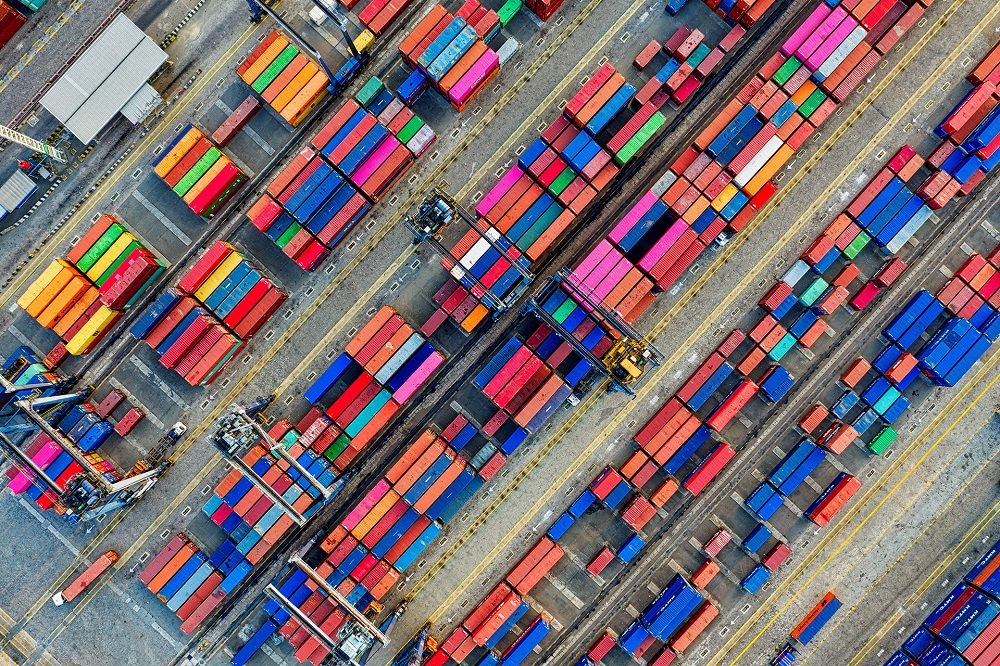

As the Industrial Internet of Things (IIoT) takes hold, manufacturing OEMs increasingly use desktop/software electronics to build smart devices, machines, and equipment. These devices are the “things” in IIoT: together they form a connected ecosystem and sit at the core of the digital thread.

Desktop/software product development therefore plays an important role in IIoT adoption. Selecting a reliable platform largely determines time to market, production cost, and product quality. Test automation and simulation are widely used together to produce reliable, stable desktop/software devices.

Simulation is the process of running a model of the device under realistic operating conditions to uncover unknown design interactions and gain a clearer view of possible glitches. It helps test automation streamline defect identification and fixing.
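To make the idea concrete, here is a minimal Python sketch of simulation-driven testing. The `SimulatedTempSensor` model, the operating conditions, and the 0.5 °C tolerance are hypothetical choices for illustration, not part of any specific product or tool. The test drives the model across a range of ambient temperatures and flags readings that fall outside tolerance, which is how a simulated "stuck reading" glitch surfaces before any real hardware is involved.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SimulatedTempSensor:
    """Hypothetical device model: reports ambient temperature plus bounded noise."""
    noise_c: float = 0.2                # maximum absolute measurement noise (deg C)
    stuck_at: Optional[float] = None    # if set, models a glitch where the reading freezes

    def read(self, ambient_c: float, rng: random.Random) -> float:
        if self.stuck_at is not None:
            return self.stuck_at
        return ambient_c + rng.uniform(-self.noise_c, self.noise_c)


def run_simulation(sensor: SimulatedTempSensor, conditions: List[float],
                   tolerance_c: float = 0.5, seed: int = 0) -> List[Tuple[float, float]]:
    """Drive the model through each ambient condition.

    Returns the (ambient, reading) pairs whose error exceeds the tolerance.
    """
    rng = random.Random(seed)  # fixed seed keeps runs reproducible
    failures = []
    for ambient in conditions:
        reading = sensor.read(ambient, rng)
        if abs(reading - ambient) > tolerance_c:
            failures.append((ambient, reading))
    return failures


conditions = [-40.0, 0.0, 25.0, 85.0]  # hypothetical operating range
print(run_simulation(SimulatedTempSensor(), conditions))               # []
print(run_simulation(SimulatedTempSensor(stuck_at=25.0), conditions))  # [(-40.0, 25.0), (0.0, 25.0), (85.0, 25.0)]
```

Because the model's noise never exceeds the tolerance, the healthy model passes every condition, while the glitching model fails everywhere except at the one temperature it happens to be stuck at.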

An automated testing process combines simulation and testing to improve the overall efficiency of the desktop/software device. Today, smart test automation is the “smarter” option for building reliable desktop/software devices from the ground up.

Smart Test Automation: A Revolution for Desktop/Software Applications

Industrial automation is at the core of Industrie 4.0. Adding smart devices to the automated industrial network has made many manual work processes easier and more accurate. With the emergence of software-driven desktop/software systems, the industrial automation sector is undergoing a major shift toward better IIoT implementation.

As dependence on these devices grows, desktop/software device testing should not be an afterthought in the larger implementation. At the same time, running many tests in an IIoT environment with an ever-growing number of desktop/software systems is challenging. Improving overall test accuracy calls for smart test automation tailored to desktop/software devices.

Smart test automation is a platform on which desktop/software devices are tested to understand their design interactions and uncover possible glitches in operation. This matters because it verifies that the product does what it is expected to do. Over time, the approach has helped desktop/software applications work more effectively, improving the overall efficiency of IIoT systems.

How Does Test Automation Help Build Sound Desktop/Software Devices?

Desktop/software application testing is often mistaken for pure software testing, but the two are quite different. Desktop/software product testing involves validating and verifying both the hardware and the firmware, with the end goal of a device that meets user requirements. Automation suits this work well because it involves many iterations and must check both firmware and hardware requirements. Below are some of the advantages that set automated testing apart from manual testing:

Improved Productivity

Manual testing often means a heavily loaded QA team and a higher risk of human error. An automated testing system relieves much of that pressure by enabling a fast feedback cycle and better communication between departments, and it makes automated test logs easy to maintain. Together, these benefits shorten time to market and keep the team productive. A motivated workforce and a smooth testing system form the backbone of a quality end product.

Reduced Business Costs

Repeated errors, repeated tests, and reruns may each seem trivial, but over time they add up and drive up business costs. Automated tests help reduce these costs because they are designed to detect design failures or glitches earlier in desktop/software product development. As a result, you typically need fewer product test reruns than with manual testing.

Improved Accuracy

This is one of the major advantages of a smart test automation setup. It removes most sources of human error, especially in a complex network. Errors introduced by the test system itself remain possible, but the overall error rate drops substantially. The resulting accuracy helps meet customer demands and keep customers satisfied.

Assurance of Stability

Automated testing helps you validate the stability of your product early in development, well before release. Manual stability tests often take a long time and are prone to human error. With automated testing, you can set up a process that records the product's status in a database and reports updates automatically.
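As a rough illustration of automated status updates, the sketch below runs a health check many times in a row (a simple soak test) and logs each result to a database. Python's built-in `sqlite3` module stands in for "the relevant database"; `run_stability_test`, `hypothetical_check`, and the one-in-50 failure pattern are assumptions made for the example, and the code assumes a fresh database for each run.

```python
import sqlite3
from typing import Callable


def run_stability_test(check: Callable[[int], bool], iterations: int,
                       db: sqlite3.Connection) -> float:
    """Call `check` repeatedly, log every result to `db`, and return the pass rate.

    Assumes `db` is fresh: iteration numbers are used as primary keys.
    """
    db.execute(
        "CREATE TABLE IF NOT EXISTS stability_log ("
        "iteration INTEGER PRIMARY KEY, status TEXT NOT NULL)"
    )
    for i in range(iterations):
        status = "PASS" if check(i) else "FAIL"
        db.execute("INSERT INTO stability_log (iteration, status) VALUES (?, ?)",
                   (i, status))
    db.commit()
    (passed,) = db.execute(
        "SELECT COUNT(*) FROM stability_log WHERE status = 'PASS'"
    ).fetchone()
    return passed / iterations


def hypothetical_check(iteration: int) -> bool:
    # Stand-in for a real health check (e.g. pinging the device or reading a register).
    # Here the device is assumed to drop one response in every 50 cycles.
    return iteration % 50 != 49


conn = sqlite3.connect(":memory:")
rate = run_stability_test(hypothetical_check, 200, conn)
print(f"pass rate: {rate:.1%}")  # pass rate: 98.0%
```

Because every iteration is stored rather than only the final verdict, the log can later be queried for the exact cycles that failed, which is what makes intermittent stability problems traceable.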

Smart Test Automation: Challenges and Tips

Because smart testing is a complex process, automating it brings its own challenges. Most of them, however, can be resolved with the expertise and knowledge to apply the right strategies and get the most out of a smart test automation setup.

Some of the challenges are:

Lack of skilled professionals to handle technology-driven testing algorithms

Not everyone has the skills to get the full value out of automated testing. You can either hire skilled professionals or train your employees to adapt to an automated testing culture.

Lack of proper planning between the teams

Good teamwork is one crucial factor in the success of automated testing. Your teams need to collaborate to keep the tests stable. One way to achieve this is a modular approach, where tests are built locally against a real device or browser. The teams can then run them regularly, map out the results, and coordinate more effectively.
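The modular approach can be sketched with Python's standard `unittest` module. In this minimal example, `FakeDevice` is a hypothetical stand-in for a real device driver, each test class represents one team's module, and `run_modules` is an illustrative helper that runs every module on its own and maps each module name to a pass/fail result the teams can share.

```python
import io
import unittest
from typing import Dict


class FakeDevice:
    """Hypothetical stand-in for a device under test; swap in a real driver later."""

    def __init__(self) -> None:
        self.relay_on = False

    def set_relay(self, on: bool) -> None:
        self.relay_on = on

    def firmware_version(self) -> str:
        return "1.2.0"


class DeviceTestBase(unittest.TestCase):
    """Shared setup so every team's module talks to the device the same way."""

    def setUp(self) -> None:
        self.device = FakeDevice()


class RelayTests(DeviceTestBase):      # e.g. owned by the hardware team
    def test_relay_toggles(self) -> None:
        self.device.set_relay(True)
        self.assertTrue(self.device.relay_on)


class FirmwareTests(DeviceTestBase):   # e.g. owned by the firmware team
    def test_version_has_three_parts(self) -> None:
        self.assertEqual(len(self.device.firmware_version().split(".")), 3)


def run_modules(*test_cases: type) -> Dict[str, bool]:
    """Run each test module separately and map its name to whether it passed."""
    loader = unittest.TestLoader()
    summary = {}
    for case in test_cases:
        suite = loader.loadTestsFromTestCase(case)
        # Send the runner's console output to a buffer to keep the summary readable.
        result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
        summary[case.__name__] = result.wasSuccessful()
    return summary


print(run_modules(RelayTests, FirmwareTests))  # {'RelayTests': True, 'FirmwareTests': True}
```

Keeping the device behind a shared base class means a team can develop its module locally against the fake and later point the same tests at a real device by changing only `setUp`.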

Dynamic nature of automated tests

This challenge is common because many companies have yet to build agility into their processes, and agility is required to implement these tests successfully. One way to overcome it is to start small and scale your testing process as the situation demands.

Conclusion

Undoubtedly, smart test automation is the future of desktop/software devices. For efficient implementation of automated test systems, we at Utthunga provide the right resources and the right guidance. Our experienced team is well versed in leading technologies and knows how to pick the right strategies to aid your growth.

Leverage our services to stride ahead in the Industrie 4.0 era!